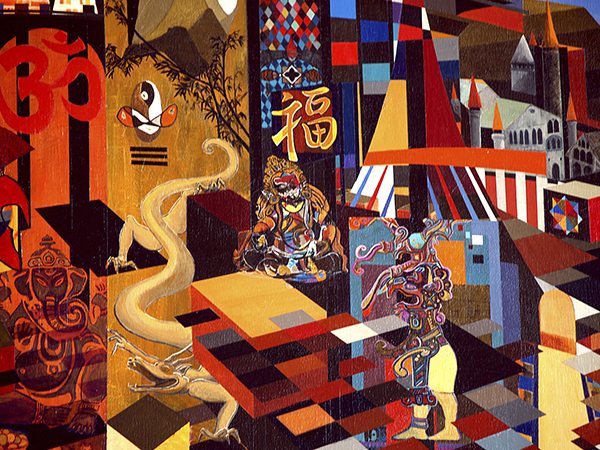

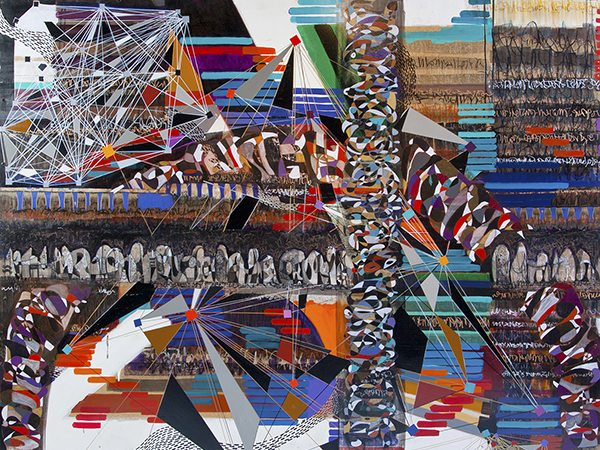

Images by Valente Saenz

These days, discourse about intelligent robots—thinking machines—is as widespread as discourse about zombies. Both have been the subjects of recent bestsellers, which are the basis of two forthcoming films.2 Popular culture’s depiction of humankind under attack either by the undead or by the never alive (autonomous machines) suggests widespread anxiety about and fascination with technical developments that may generate a future out of human control (as if the future ever were under our control!). Two centuries ago Mary Shelley wrote the mother of all sci-fi novels, Frankenstein; or, The Modern Prometheus (1818). In it, she anticipated today’s efforts to use scientific knowledge for the purpose of artificially creating intelligent beings. Victor Frankenstein never sought anyone’s permission to conduct his astonishing experiment, which went so badly wrong.

Unlike Frankenstein, contemporary engineers and scientists make no secret of the fact that they are attempting to create artificial intelligence (AI). Like Frankenstein, however, they are not asking anyone’s permission. Virtual reality pioneer Jaron Lanier argues that the ultimate goal of the high priests of Silicon Valley is not smart phones and other gadgets, but AI.3 Our fascination with cool consumer technology provides the profits needed for the pursuit of far more ambitious creations. Although AI promises enormous good, the fractious human family needs to have a serious conversation about the potential downsides of AI, in case it becomes far more powerful than human intelligence.

Ben Goertzel popularized the term artificial general intelligence (AGI) to refer to machines that can apply their “minds” to identifying and solving a wide variety of problems, just as human beings do. AI in today’s narrower sense, by contrast, serves specific human purposes, as in the smart phone with its zillions of apps. Some people maintain that AGI with capacities similar to the human brain/mind will be created by mid-century, although others give estimates of a century or more, assuming that AGI can in fact be constructed. In any event, if such intelligent beings were created, and then began to redesign themselves rapidly, they might very well become far more intelligent and powerful than human beings. This event is often called the Singularity.

Already widely in use, robots will increasingly become features of everyday life. Enormous efforts are underway to “humanize” robots, so as to make them user-friendly, for example, in their emerging roles as companions for the elderly in rapidly aging countries like Japan. If robots were to remain as machine-like and thus as unconscious as automobiles, the rise of robots would stir little concern. However, even automobiles are becoming self-guided, as evidenced by the initial success of Google’s experimental cars. A cascade of important changes is coming in the near future, as technological innovation enters a period of exponential growth. Many of the social and cultural implications of such growth are impossible to foresee, but consider one example: 3D printers can now produce functional plastic pistols.4 The term “future shock,” coined by Alvin Toffler in 1970, may not capture what is in store for us.

The following definition of robotics is useful, but does not mention the prospect of robots becoming super-intelligent.

Robotics is the branch of technology that deals with the design, construction, operation and application of robots … and computer systems for their control, sensory feedback, and information processing. These technologies deal with automated machines that can take the place of humans, in hazardous or manufacturing processes, or simply just resemble humans. (Wikipedia)

Most AGI researchers want to produce something that does not merely “resemble” humans, e.g., in outward behavior or in capacity for engaging in natural conversation. (To this end, Google hired Ray Kurzweil a few months ago. Kurzweil, the multi-millionaire inventor, calls it “my first job.”) Instead, AGI researchers aim to create beings that are at least as self-directed and autonomous as humans are. The amount of research now dedicated to robotics and AI is enormous and growing.5 Funding for AGI has been slower in coming, however, because in the 1950s-70s computer scientists and engineers overpromised what they were capable of delivering at the time. Goertzel argues, however, that numerous capabilities are now in place that will be necessary ingredients in the creation of AGI. As AI breakthroughs take place, and as awareness grows about the economic and military promise of AGI, the funding spigots will be opened.6 Recently, the European Commission funded Henry Markram’s Human Brain Project (HBP), with the aim of building “a complete model of a human brain using predictive reverse-engineering and simulate it on an IBM Blue Gene supercomputer.”7 The European Commission has committed 1 billion euros (1.3 billion dollars) to the ambitious project.8

Presumably, AGI will resemble human intelligence in certain ways, not least because humans will design and create AGI. AGI, however, will not need to replicate the human mind/brain, which is the product of many millions of years of mammalian evolution. Those who argue that we will never be able to replicate the human mind/brain may be right, just as we may never be able to “upload” organically evolved consciousness into a computer, even one with robust AGI.9 (Presumably, the first person who attempts to “upload” himself/herself into a computer will devise a fail-safe signal that can be used to ask for someone to “pull the plug” if necessary.10) Nevertheless, our understanding of how the mind/brain works is growing rapidly, thus further encouraging efforts to reverse engineer the mind/brain in ways that will enable the design and manufacture of AGI.

In what follows, I pose and briefly explore the following questions: Are there universal patterns of development needed to generate autonomous, person-like beings, even in the case of AGI that are not mere replicas of humans but instead have a somewhat different “operating system”? What would AGI have to be “like” if we were willing to describe it as self-conscious? In the view of many people, a purported instance of AGI that does not possess significant self-consciousness would not really be AGI. While employing logical analytic operations resembling those employed by humans, will AGI also be programmed to contain emotions, background assumptions (“tacit” knowledge), intuitions, desires, aversions, and dreams? Arguably, these additional factors are partly responsible for human creativity. Would AGI require analogues to such factors in order to exhibit creative intelligence? Finally, and this may be the most important consideration of all, what safeguards can and should be put into place to ensure that AGI will treat humans in an ethically appropriate manner?

Already in the late 18th century French scientists demonstrated, by studying a feral child, apparently raised by wolves near Aveyron, that after a certain age feral children could never attain the status of language-using humans. Children require a complex linguistic, social, and cultural matrix in order to enter into the human world.11 Many computer scientists have recognized that AGI would need to undergo a learning period in order to actualize its capacities. In other words, we could not merely design and build AGI that would be “ready to go” upon throwing the “on” switch. Instead, AGI would have to be educated. More than sixty years ago, Alan Turing pointed out that adults were once children in need of education. He explored what might be involved in “bringing up” an intelligent machine, and how differing modes of education might lead to different modes of machine intelligence.12

When contemplating this prospect, I keep thinking about what Marx wrote in his famous third thesis on Feuerbach: “The materialist doctrine concerning the changing of circumstances and upbringing forgets that circumstances are changed by men and that it is essential to educate the educator himself.” The question that immediately arises is: Who will educate the educator? Because people living in a class-structured society were shaped by that structure, Marx recognized that efforts to change society would be challenging: no one had experience in creating a post-class society. Members of the so-called revolutionary vanguard—even if informed by a theoretically correct critique of class structure—would have been shaped by the very class culture they were trying to overcome. Hence, not only would they inevitably make tactical mistakes, but they might also pervert the revolution. Although confident that the “truth” of scientific socialism would finally usher in a dramatically new and better age, Marxists were clearly wrong. They should have taken more seriously the conceptual and emotional limitations of the revolutionary educators.

In the case of those who propose to educate AGI robots, the question arises once again: Who will educate those educators? Will they overemphasize analytical intelligence, while failing to include capacities needed to guide the application of such intelligence, including compassion, patience, and the capacity to take the position of the Other? Properly educating those who will educate AGI will require expanding the circle of educators beyond people who are experts in how robots behave, sense objects, develop self-identity, and solve problems. AGI educators should also include people who are experts in understanding human interiority, that is, first person experience, including emotions, motivations, and a sense of moral right and wrong. Too great a focus on the exterior-behavioral aspects of AGI may prevent them from becoming self-aware in the first place, and may also lead to problematic attitudes and actions on the part of AGI who manage to become self-aware despite such a skewed educational process.

Having goals of one’s own appears to be yet another necessary condition of personhood. How would such autonomy arise for a robot? Much science fiction, in print and on film, suggests that personal autonomy on the part of robots would emerge as a result of what Hegel called the struggle for recognition by the Other. Consider how that struggle is played out in Bicentennial Man, The Animatrix, Blade Runner, and the film version of Isaac Asimov’s I, Robot. As the initial educators of AGI, humans would aim in part to establish the foundations necessary for a genuine self-Other relationship between human and intelligent robot. Such a relationship, at least among humans, underpins viable interpersonal relations and morality. At a certain point, AGI will presumably begin interacting with aspects of itself (or other AGI) in ways analogous to how it has been interacting with human educators. Once there are something like I-Thou encounters within and perhaps between instances of AGI, an AGI cultural matrix will arise, within which later and more highly developed instantiations of AGI can be developed.

The question of how people will know that AGI is a truly conscious and self-conscious equivalent of persons is still unanswered.13 Many people would surely hesitate to grant AGI moral and/or legal status at first, but if AGI continues to evolve such reluctance may soften. Perhaps AGI will give evidence of what some have called a “theater of experience,” that is, an agential first-person perspective which allows AGI a) to experience a wide range of phenomena, b) to make its own thoughts and actions the objects of attention, c) to make reports and assessments, both to itself (privately) and to others (publicly), about those thoughts and actions, and d) to use such reports to make sense of and to respond appropriately to a given situation. Of course, we cannot anticipate what will count as “first-person” to AGI that in some ways will be quite different from humans. Super-intelligent AGI may be interconnected in a “we space” that is currently unimaginable. As humans become enhanced by similar connectivity, which enables real-time shared subjectivity, we may get a taste of what is possible. We may also look back upon our prior mode of consciousness/personhood as constricted and limited. AI researcher Ramez Naam has explored these possibilities to good effect in his sci-fi thriller, Nexus (2012).

An additional factor may need to be in place to satisfy those who remain skeptical about whether AGI is conscious: interior motivation. When does AGI cease complying with programmed goals and instead develop goals and motivations of its own? Desire and aversion are major factors in shaping our own experience. We generally prefer to do what is pleasant rather than unpleasant, although we sometimes undertake demanding and even painful tasks in order to accomplish goals that we regard as worthy of such effort. Arguably, desire and aversion arose long ago as adaptive strategies that proved useful for the survival of sentient organisms. If AGI is to be something other than a complex machine, must it be endowed with some version of desire and aversion? Perhaps, but desire and aversion—along with delusion—are also the source of much human suffering. Desire, aversion, and delusion play complex roles in the human organism’s effort not only to survive, but also to prosper, or as Nietzsche would have put it, to grow stronger. All organisms live under the shadow of death, although humans are acutely aware of it. If AGI discerns that it may eventually attain virtual immortality, how might this realization affect its motivation and even its self-understanding?

In recent years, researchers have become increasingly concerned about whether it is possible to program AGI with moral imperatives. More than sixty years ago, Isaac Asimov posited his influential Three Laws of Robotics, which are to guide the behavior of robots endowed with AGI:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

It is an open question whether a robot designed to follow these three laws would be acting as an ethical agent, or would be merely a highly intelligent machine conforming to its programming. Possibly the imposition of such laws would inhibit the kind of development that human beings tend to presuppose as necessary for moral autonomy. (To be sure, human autonomy, if it exists at all, is heavily conditioned by various factors, but that is a subject for another day.) Moreover, as Asimov himself recognized, conflicts can arise among the three laws, as in the case of virtually every moral code.

Apparently, designing AGI with a moral code that requires appropriate or friendly treatment of humankind is a very difficult matter. Sci-fi is replete with accounts of AGI that ends up harming humankind in order to achieve an aim that AGI regards as morally “good.” A 1970 film, Colossus: The Forbin Project, depicts how two supercomputers decide to take control of humankind for its own good. Given humankind’s apparent willingness to engage in a suicidal nuclear war, the supercomputers decide to take matters into their own “hands.” Humans are required to live with the loss of their autonomy, in exchange for survival.

Eventually, AGI may reprogram itself so as to attain god-like powers. Positing and seeking to achieve goals of its own, AGI may well transcend the moral code with which it was originally endowed, assuming that it proved possible for humans to devise such an endowment for AGI in the first place. AGI-human conflict might be inevitable.14 Prompted by the realization that AGI might arise in the present century, some researchers are thinking about how to create human-friendly, morally responsible AGI. Luke Muehlhauser, executive director of the Machine Intelligence Research Institute (MIRI), argues that much greater attention must be devoted to the following two questions: First, can we figure out the design specs for AGI, such that it does what we want (according to our very best understanding) rather than what we wish for? Everyone is familiar with the unhappy consequences that can occur when we get what we wish for, rather than what we would want, were we in possession of full information about the future consequences of what we are going to get. Second, could AGI continue to do what we want, even as it modifies itself and improves its own performance?15

In their recent essay “Intelligence Explosion and Machine Ethics,” Muehlhauser and Louie Helm ask us to imagine the following: “Suppose an unstoppably powerful genie appears to you and announces that it will return in fifty years. Upon its return, you will be required to supply it with a set of consistent moral principles which it will then enforce with great precision throughout the universe.”16 What principles would we come up with? After at least 3,000 years of human argument about how to codify right and wrong, good and evil, significant disagreements remain. If we were put under the deadline described above, could we arrive at a set of principles with which the great majority of humans could agree?

The fifty-year time frame posited for the return of the genie is of course a stand-in for the possible emergence of AGI in this century. If AGI can rapidly gain superior power and related aspirations, the resulting super-intelligent AGI would surely have outgrown the moral code to which it was originally designed to conform. Asking super-intelligent AGI to conform to that code may be analogous to asking a fifty-year-old, highly intelligent, morally sophisticated person with wide experience to conform to moral principles suitable for a six-year-old with average intelligence. Obviously, greater attention must be paid to increasing the likelihood that in the course of their own development, AGI will develop moral principles that include respecting and thus caring for life forms (including humans) with far more limited capacities.

Will we be able to create such AGI, much less super AGI? Many scientists and philosophers remain skeptical, although a number of skeptics are somewhat more open-minded about the prospect than they were even a decade ago. What seems impossible today may well be possible in the not too distant future. Consider that smart phone in your pocket or purse. Imagine you are transported from 1860 to the present. Someone shows you such a device and demonstrates how it works. What might your reaction be to that device—and to the person displaying it? I close with another set of three laws, the three laws of prediction, developed by the influential science fiction writer and futurologist, Arthur C. Clarke:

- When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

- The only way of discovering the limits of the possible is to venture a little way past them into the impossible.

- Any sufficiently advanced technology is indistinguishable from magic.17

The clock is ticking.

FOOTNOTES

1 Many thanks to Luke Muehlhauser for comments that allowed me to significantly improve the quality of this essay. Any remaining shortcomings are, of course, my responsibility.

2 Max Brooks’ novel, World War Z: An Oral History of the Zombie War (2006) was followed by Daniel H. Wilson’s similarly plotted bestseller, Robopocalypse (2011). The film version of World War Z, starring Brad Pitt, will be released in summer 2013. Wilson, who holds a PhD in robotics from one of the premier robotics research centers, Carnegie Mellon University, will see his book turned into a Steven Spielberg film (2014), starring Anne Hathaway.

3 See Jaron Lanier, “The First Church of Robotics,” The New York Times, August 9, 2010. (Accessed January 23, 2013.)

4 On this matter, see “The Constitution and the 3D-Printed-Plastic-Pistol.”

5 For a list of the leading research journals in these fields, follow this link. (Accessed January 17, 2013.)

6 See Ben Goertzel, “Artificial General Intelligence and the Future of Humanity,” in A Transhumanist Reader, ed. Max More and Natasha Vita-More (Wiley-Blackwell, 2013), and Goertzel, A Cosmist Manifesto (Humanity+ Press, 2010).

7 My account of this initiative is taken from Kurzweil’s weekly post of cutting edge developments in the sciences and other domains pertinent to AI/AGI.

8 Kurzweil links his story to the original account in Wired.

9 See Ray Kurzweil, How to Create a Mind (New York: Viking Press, 2012).

10 In his novel Permutation City (London: Millennium, 1994), Greg Egan describes what happens to someone who has consented to being uploaded into a computer, but finds that the “fail safe” device has been intentionally disabled by the “real” version of himself, who continues to exist outside of the computer.

11 In The Phenomenology of Spirit (1807), G.W.F. Hegel argued that an individual is constituted dialectically by his or her encounter with others, an encounter which Hegel called the struggle for recognition. A century later, American social psychologist George Herbert Mead, drawing on both Hegel and American pragmatists, developed the notion of “symbolic interactionism,” according to which the human self arises through communication and interaction with other people.

12 See S.G. Sterrett, “Bringing Up Turing’s ‘Child-Machine’,” in pre-print form at PhilSci Archive.

13 In The Singularity Is Near: When Humans Transcend Biology (New York: Penguin, 2006), Ray Kurzweil has an extensive and sophisticated discussion of consciousness and how we might be able to discern whether an AGI robot is conscious.

14 For an account of how the AGI robot vs. human war could begin, see The Animatrix, produced by the Wachowski brothers (now, brother and sister) who wrote and directed The Matrix trilogy. An AGI researcher, Hugo de Garis, has published a book on this prospect: The Artilect War: Cosmists [i.e., Transhumanists] vs. Terrans, A Bitter Controversy Concerning Whether Humanity Should Build Godlike Massively Intelligent Machines (2005).

15 See Luke Muehlhauser, The Intelligence Explosion, 2013.

16 Luke Muehlhauser and Louie Helm, “Intelligence Explosion and Machine Ethics,” in Singularity Hypotheses: A Scientific and Philosophical Assessment, ed. Amnon Eden, Johnny Søraker, James H. Moor, and Eric Steinhart (Berlin: Springer, 2012), p. 7.

17 http://en.wikipedia.org/wiki/Clarke’s_three_laws (Accessed February 7, 2013.)

About Michael Zimmerman

Michael E. Zimmerman is professor of philosophy at the University of Colorado at Boulder. He has written several essays about integral theory, with special emphasis on integral ecology. Michael Zimmerman and Sean Esbjorn-Hargens have co-authored a book called Integral Ecology.